Graduate Coursework

Capstone Projects, Labs, Writeups

16.782 : Planning and Decision Making in Robotics (Fall 2023)

Objective:

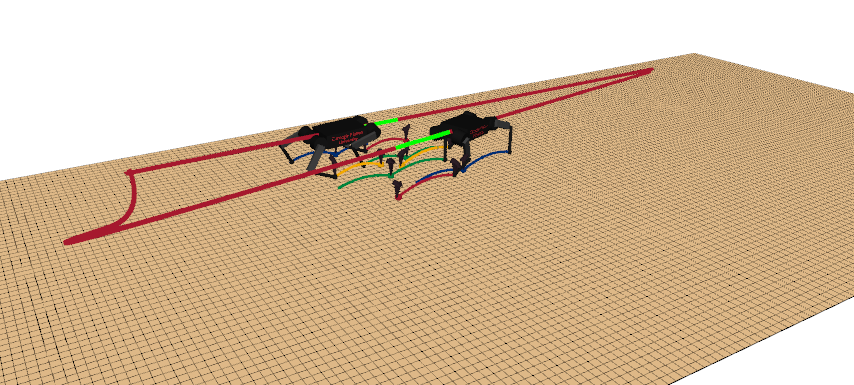

As students in 16.782, my team and I decided to tackle the challenge of motion planning for multiple robotic agents in complex environments. As robotics continues to expand into areas like search and rescue, exploration, and warehousing, the demand for autonomous robots capable of performing collision-free coordinated tasks has grown noticeably. Given my current research in quadruped robots and the unique mobility the platform provides, I decided it would be interesting to employ a variety of multi-agent planning strategies to see which performed best.

Solution:

To implement each planner, we leveraged Quad-SDK, my lab’s ROS-based framework for quadrupedal locomotion. By incorporating its suite of visualization tools, local footstep planner, and low-level motor controller, we were able to integrate and test our planners on a variety of terrain. For each sampling-based planner, the discrete-time robot state is defined as a vector of body position, orientation, and velocity. For the body of the project, my team and I explored three main motion planning algorithms:

- Sequential RRT-Connect (Rapidly Exploring Random Trees)

- Joint Space RRT-Connect

- Conflict Based Search
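To make the third approach concrete, here is a minimal sketch of Conflict Based Search on a toy 4-connected grid. It is not the project's implementation: the grid world, the breadth-first low-level planner, and all function names (`plan_single`, `first_conflict`, `cbs`) are assumptions chosen for illustration, whereas the project planned continuous body trajectories for quadrupeds. The high level expands a constraint tree in order of total path length; each detected conflict spawns two children, one per agent involved, and only that agent is replanned.

```python
import heapq
from collections import deque
from itertools import count

MOVES = ((0, 0), (1, 0), (-1, 0), (0, 1), (0, -1))  # wait, then 4-connected steps


def plan_single(grid, start, goal, vertex_c, edge_c, max_t=40):
    """Low level: space-time BFS for one agent that respects its constraints."""
    rows, cols = len(grid), len(grid[0])
    queue = deque([(start, 0, [start])])
    seen = {(start, 0)}
    while queue:
        cell, t, path = queue.popleft()
        # Accept the goal only if resting there breaks no later vertex constraint.
        if cell == goal and not any(c == goal and ct >= t for c, ct in vertex_c):
            return path
        if t >= max_t:
            continue
        for dr, dc in MOVES:
            nxt = (cell[0] + dr, cell[1] + dc)
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols) or grid[nxt[0]][nxt[1]]:
                continue
            if (nxt, t + 1) in vertex_c or (cell, nxt, t + 1) in edge_c or (nxt, t + 1) in seen:
                continue
            seen.add((nxt, t + 1))
            queue.append((nxt, t + 1, path + [nxt]))
    return None


def at(path, t):
    """Agents stay parked on their final cell after arriving."""
    return path[min(t, len(path) - 1)]


def first_conflict(paths):
    """Return the earliest vertex or edge (swap) conflict, or None."""
    horizon = max(len(p) for p in paths)
    for t in range(horizon):
        for i in range(len(paths)):
            for j in range(i + 1, len(paths)):
                if at(paths[i], t) == at(paths[j], t):
                    return ("vertex", i, j, at(paths[i], t), t)
                if (t + 1 < horizon and at(paths[i], t) == at(paths[j], t + 1)
                        and at(paths[i], t + 1) == at(paths[j], t)):
                    return ("edge", i, j, (at(paths[i], t), at(paths[i], t + 1)), t + 1)
    return None


def replan(grid, starts, goals, constraints, agent):
    """Replan one agent using only the constraints that apply to it."""
    vertex_c = {(d, t) for a, kind, d, t in constraints if a == agent and kind == "vertex"}
    edge_c = {(d[0], d[1], t) for a, kind, d, t in constraints if a == agent and kind == "edge"}
    return plan_single(grid, starts[agent], goals[agent], vertex_c, edge_c)


def cbs(grid, starts, goals):
    """High level: best-first search over constraint sets, ordered by total path length."""
    paths = [replan(grid, starts, goals, frozenset(), a) for a in range(len(starts))]
    if any(p is None for p in paths):
        return None
    tie = count()  # unique tie-breaker so the heap never compares constraint sets
    open_list = [(sum(len(p) for p in paths), next(tie), frozenset(), paths)]
    while open_list:
        _, _, constraints, paths = heapq.heappop(open_list)
        conflict = first_conflict(paths)
        if conflict is None:
            return paths
        kind, i, j, data, t = conflict
        for agent in (i, j):
            # For a swap, agent j traverses the same edge in the opposite direction.
            d = (data[1], data[0]) if kind == "edge" and agent == j else data
            child_c = constraints | {(agent, kind, d, t)}
            new_path = replan(grid, starts, goals, child_c, agent)
            if new_path is None:
                continue
            child_paths = list(paths)
            child_paths[agent] = new_path
            cost = sum(len(p) for p in child_paths)
            heapq.heappush(open_list, (cost, next(tie), child_c, child_paths))
    return None


# Two agents whose shortest paths cross in the middle of an empty 3x3 grid.
grid = [[0] * 3 for _ in range(3)]
starts, goals = [(1, 0), (0, 1)], [(1, 2), (2, 1)]
paths = cbs(grid, starts, goals)
```

In this toy case both agents want the center cell at the same timestep, so the search resolves the conflict by having one of them wait a step. Sequential planning would instead fix the first agent's path and force the second to plan around it.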

Results:

We demonstrated the success of each method using simulation tests conducted in Gazebo and Rviz. For initial validation, the number of agents was set to two and later extended to four to see how each method scales. In each scenario, we show that the planner can compute kinodynamically feasible, collision-free body trajectories for multiple robots over a number of randomly generated trial environments. The results are shown in the table below.

| Planner | Average Path Length (m) | Average Planning Time (s) | Success Rate |
|---|---|---|---|
| Sequential | 18.2 | 0.094 | 69% |
| Joint | 16.9 | 1.067 | 81% |
| Conflict Based Search | 15.1 | 0.254 | 100% |

The results demonstrate the importance of application specific planners, particularly for implementations where tradeoffs can be made on speed, computational complexity, completeness, and efficiency. CBS remains the most versatile approach, but the overall project highlights the continued need for scalable solutions to real world multi-agent motion planning.

Applied Skills: C++, ROS, Gazebo, RRT, Conflict Based Search, Multi-Robot Motion Planning

16.745 : Optimal Control and Reinforcement Learning (Spring 2023)

In this paper we show a trajectory planning technique that mimics a monkey bar robot swinging from bar to bar. Using a hybrid system direct collocation (DIRCOL) trajectory optimization, we successfully demonstrate the robot swinging up from a dead hang to catch the first bar and swing to the subsequent bars. This DIRCOL technique was tested on various mass distributions in the robot as well as different bar separation distances to understand the behavior with varying parameters. In addition, we show the importance of a free time setup on the cost function in producing consistent feasible trajectories using this DIRCOL technique.

Applied Skills: Julia, MeshCat, DIRCOL, Hybrid Systems, Lagrangian Dynamics, iPOPT Trajectory Optimization

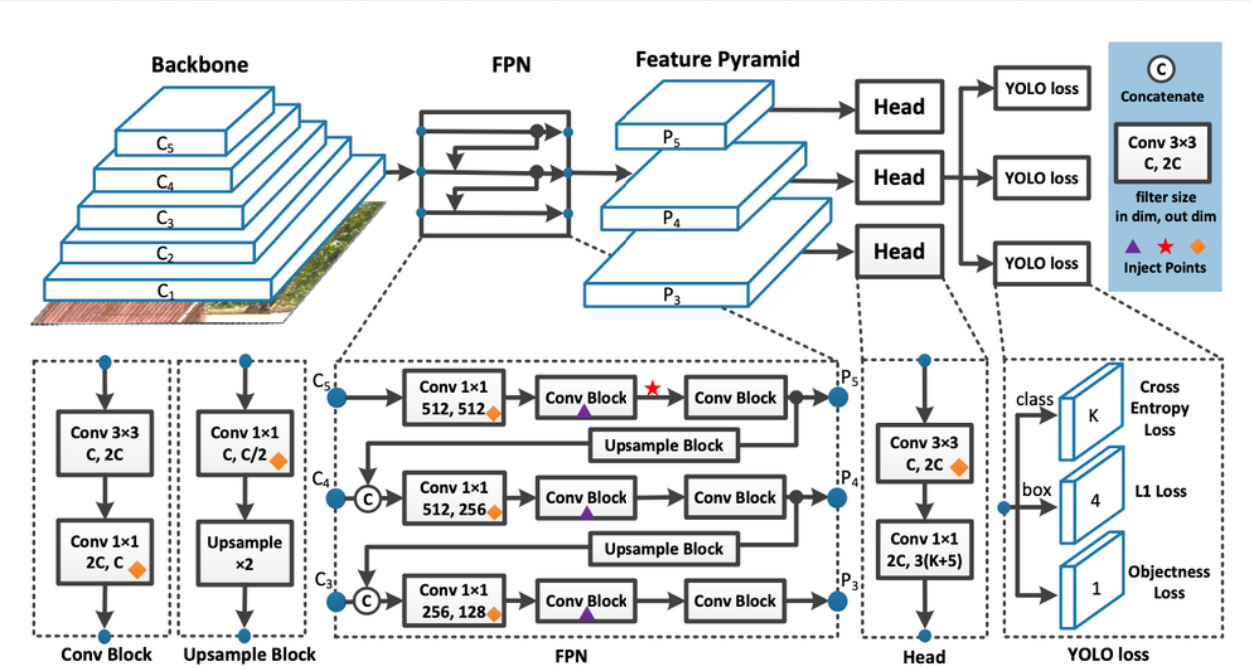

11.785 : Introduction to Deep Learning (Spring 2023)

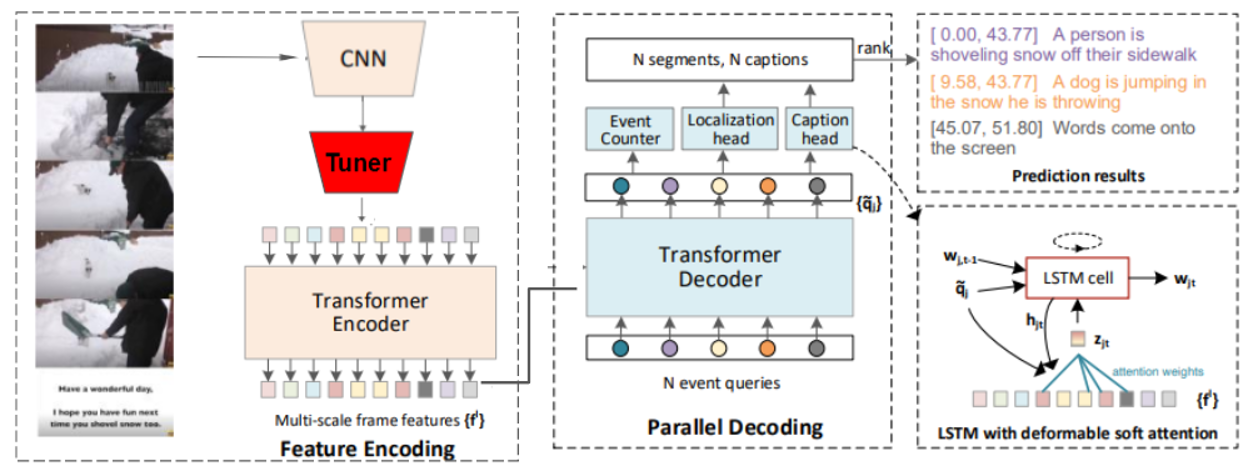

In recent years, there has been a large research focus on dense video captioning. Video captioning has applications in many fields, such as autonomous driving, video surveillance, and creating captions for those with visual impairments. As such, this work explores a novel approach: employing language models (LMs) to semantically align video features in an attempt to improve the overall performance of dense video captioning. The baseline model for comparison, End-to-end dense video captioning with parallel decoding (PDVC) [1], produced strong results compared to many state-of-the-art video captioning frameworks. PDVC is trained on the ActivityNet and YouCook2 datasets, but for this study only YouCook2 was used due to computational capacity limits. We propose incorporating semantic alignment by adding a tuner network before the video features are passed through the PDVC framework. Ablations were run for different tuner architectures and, overall, the modified PDVC framework outperformed the baseline PDVC on many evaluation metrics. Applying semantic alignment for dense video captioning to larger and more comprehensive datasets remains a promising future extension.

Applied Skills: PyTorch, Linux, GCP, Python, Deep Learning

24.678 : Computer Vision for Engineers (Fall 2022)

Objective:

While taking CMU’s 24.678, my team and I decided to apply traditional computer vision techniques to tackle the growing incidence of fatal injuries on construction sites nationwide. As the number of such injuries has risen by nearly 90% in the last three years, my team devised a method to improve worker awareness and safety on jobsites, maximizing company profits and reducing worker downtime.

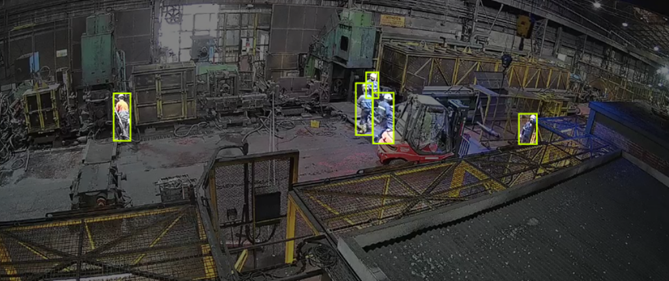

Solution:

My team developed a system that combines image processing and point cloud-based computer vision methods to track the movement of workers around construction sites and notify them when they enter potentially dangerous regions. For simplicity, we targeted the most common construction site hazard: workers being struck by falling objects. Ideally, we’d like this technology to allow us to prevent some of the accidents we see every year.

To solve this problem, we propose a multistep method. First, we preprocess our worksite by selecting four fixed reference points in the camera’s field of view. Given a video stream of the worksite, we need a reliable way to map worker positions in the image to worker positions in space. To do this, we operate under the assumption that the camera position is fixed and all workers are on the same plane (the ground). Assuming the worksite shape and dimensions are known, this lets us generate a static transformation matrix that maps pixel values to world coordinates and accounts for the unique perspective of our camera.
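The pixel-to-ground mapping described here is a planar homography, which four point correspondences determine. The sketch below fits one in pure Python, under the usual assumption that the bottom-right entry of the matrix can be normalized to 1. The function names and the pixel and world coordinates are hypothetical values chosen for illustration, not the project's calibration data. In practice a library routine such as OpenCV's `cv2.getPerspectiveTransform` does the same job.

```python
def solve_linear(a, b):
    """Solve a*x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("reference points are degenerate (e.g. three are collinear)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_homography(pixels, world):
    """Fit the 3x3 matrix H (with H[2][2] = 1) from four pixel -> world pairs."""
    a, b = [], []
    for (x, y), (u, v) in zip(pixels, world):
        # u = (h11*x + h12*y + h13) / (h31*x + h32*y + 1), and likewise for v.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def pixel_to_world(H, x, y):
    """Map an image point to ground-plane coordinates (perspective divide)."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    u = (H[0][0] * x + H[0][1] * y + H[0][2]) / w
    v = (H[1][0] * x + H[1][1] * y + H[1][2]) / w
    return u, v


# Hypothetical reference points: a trapezoid in the image that corresponds
# to a 10 m x 20 m rectangle on the ground.
pixels = [(100, 400), (540, 400), (420, 150), (220, 150)]
world = [(0.0, 0.0), (10.0, 0.0), (10.0, 20.0), (0.0, 20.0)]
H = fit_homography(pixels, world)
```

Because the camera is fixed, `H` only needs to be computed once; every detected worker position can then be passed through `pixel_to_world` to place it on the bird's-eye plot.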

Results:

For a quick representation of worker position, we plotted their location from a bird’s-eye view. In the future, we plan to generate a more comprehensive representation of worker position, perhaps incorporating velocities or making predictions about future worker behavior. Extending the method to work with multiple cameras and occlusions also remains future work. Ultimately, we were able to successfully track the location of each worker in a variety of environments.

Applied Skills: OpenCV, Python, Coordinate Transformations, Object Detection, Transfer Learning

24.695 : Modern Control Theory (Fall 2022)

Objective:

As a student in 24.695, CMU’s Control Theory class, I was assigned the challenge of creating an efficient controller and estimator for a small self-driving vehicle. Given the projected expansion of the driverless car industry to reach 93 billion by 2028, this project is geared towards addressing that growth. For this project, we drew inspiration from CMU’s yearly buggy competition for our track design and developed an optimal controller for the car.

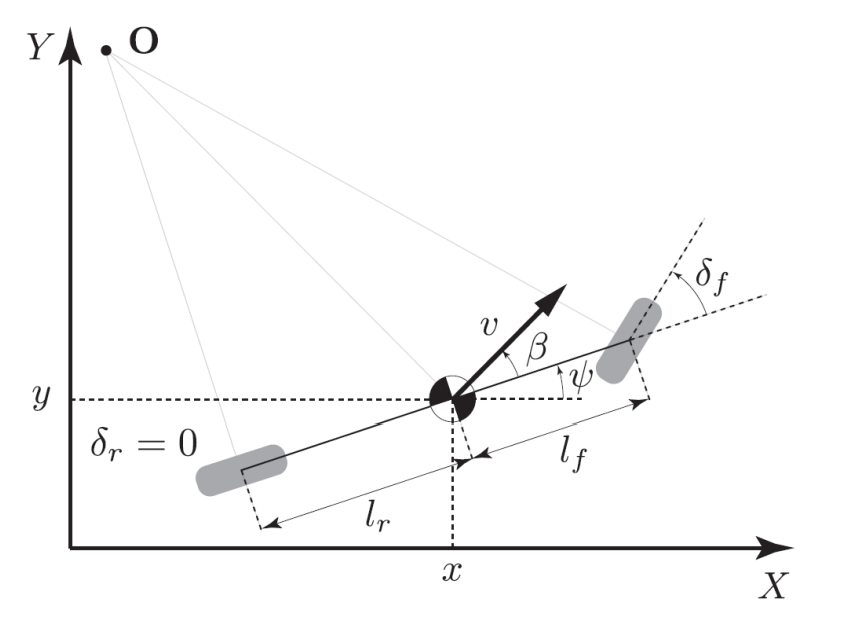

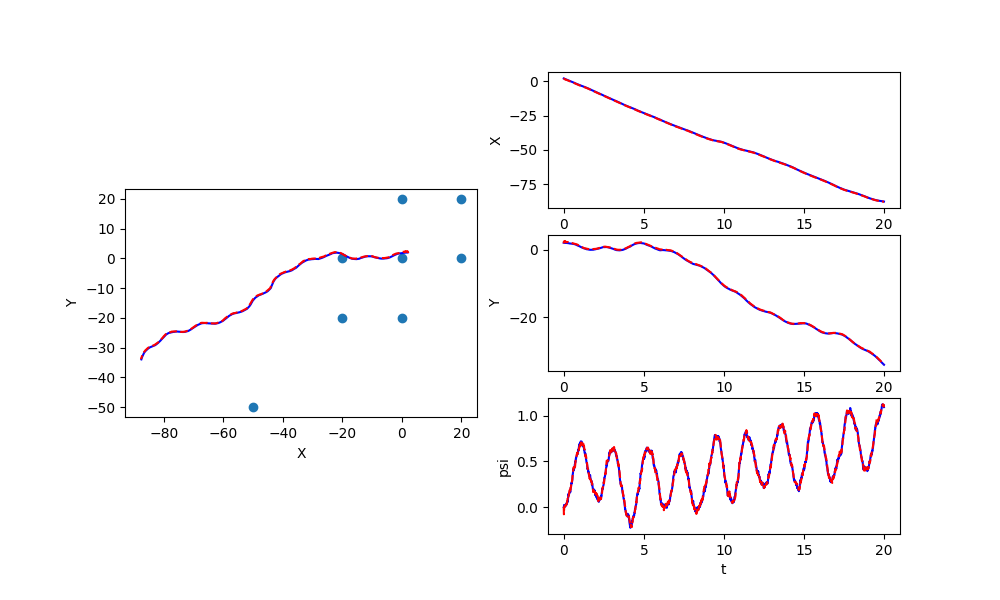

Solution:

To approximate the motion of the car, a simple bicycle model was used to define system dynamics. The car is modeled as a two-wheeled vehicle with two degrees of freedom described by its longitudinal and lateral dynamics. As such, I designed a two-part controller that generates control commands including desired steering angle δ and longitudinal force f.

Given a desired trajectory of waypoints, I implemented the following controllers:

- PID Controller

- State Feedback Controller

- LQR Controller

- MPC Controller
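As a rough illustration of the simplest controller in this list, the sketch below uses a PID loop to compute a longitudinal force that tracks a target speed. The plant is an assumed point mass with linear drag rather than the course's bicycle model, and the mass, drag coefficient, gains, and function names are all hypothetical values picked so the loop is well damped.

```python
class PID:
    """Textbook PID controller acting on a scalar error signal."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_speed_tracking(target_speed=8.0, mass=1000.0, drag=50.0, dt=0.02, steps=2000):
    """Drive an assumed point-mass car (m * dv/dt = force - drag * v) toward target_speed."""
    pid = PID(kp=2000.0, ki=400.0, kd=0.0)  # hypothetical gains, not tuned for a real car
    v = 0.0
    for _ in range(steps):
        force = pid.update(target_speed - v, dt)  # longitudinal force command
        v += dt * (force - drag * v) / mass       # forward-Euler integration
    return v


final_speed = simulate_speed_tracking()
```

The integral term is what removes the steady-state error caused by drag; a proportional-only controller would settle slightly below the target speed. A steering loop would follow the same pattern, with cross-track or heading error as the input and steering angle δ as the output.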

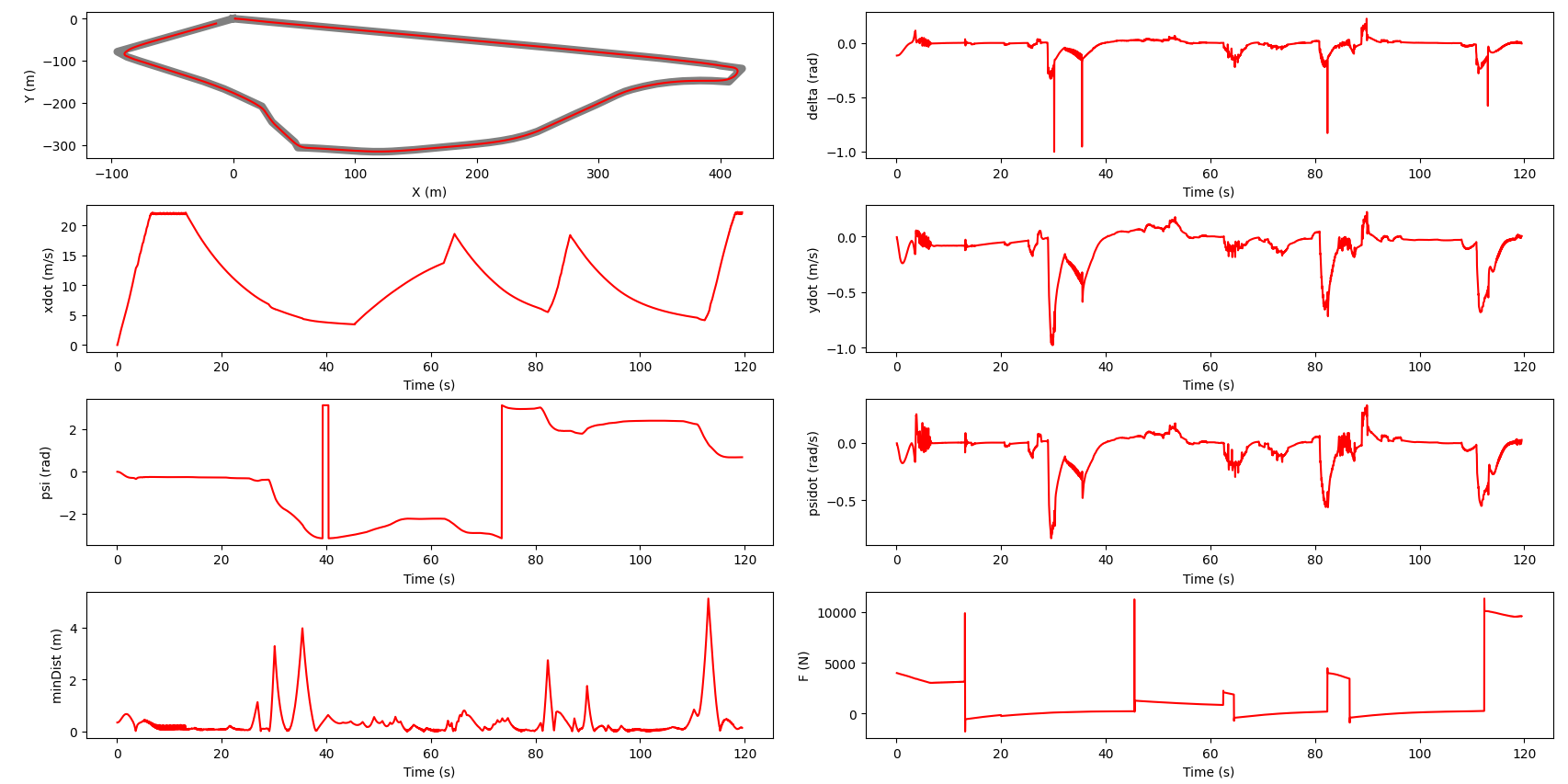

Results:

To assess the performance of our simulation, we tested the model on a track modeled after CMU’s buggy course. Driving simulations were then performed using Webots software. The model was able to complete the track in under 120 seconds and had an average deviation of less than 3 meters from the optimal tracked path.

Applied Skills: Python, Webots, Extended Kalman Filter SLAM, MPC, LQR, State Feedback, PID